16 Jan 2019

ShapeStacks: Giving Robots a Physical Intuition

TLDR: Physical intuition is a human super-power. It enables our superior object manipulation skills which we apply in countless scenarios – from playing with toys to using tools. The ShapeStacks project aims to equip robots with a similar intuition by providing a virtual playground in which robots can acquire physical experiences.

Most of us have probably played a game of Jenga, dealt with stacks of dirty dishes in our kitchen or used a hammer to drive a nail into a piece of wood. The inherent complexity of these simple everyday tasks becomes immediately clear when we try to build a machine that is capable of doing the same thing. Robots are essentially computers integrating sensing, locomotion and manipulation. And like all computers, they are first and foremost fast and exact calculators. Yet, despite their number-crunching powers (which allow them to perform complex physical and geometric calculations in a split second), they still largely struggle with basic object manipulation. Humans, on the other hand, possess only a tiny fraction of the arithmetic exactitude of a computer but are still capable of “computing” accurate movements of their limbs (e.g. swinging a hammer onto a nail’s head) and estimates of the physical states of the objects around them (e.g. judging the stability of a stack of dishes).

Researchers in cognitive science have been investigating our perception of the physical environment for some time, e.g. [1, 2, 3], but the exact inner workings of this extraordinary human capability remain elusive. Hence, in lieu of a more precise description, we commonly refer to it as physical intuition. Physical intuition is equally intriguing from a roboticist’s point of view, since it may lead to a variety of skills our robots are currently lacking: from rapid assessment of unfamiliar situations to dexterous manipulation of objects and even creative use of tools.

We started the ShapeStacks project to investigate physical intuition and how it can be acquired with the help of machine learning. Several papers have demonstrated the feasibility of predicting intuitive physical properties of a scene such as the stability of stacked structures [4, 5, 6] from visual input.

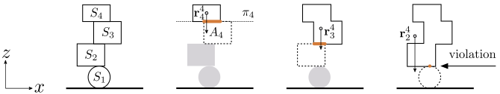

The stability of a stack can be tested by considering sub-stacks sequentially, from top to bottom. For stability, the projection of the centre of mass of each sub-stack must lie within the contact surface of the object supporting it. As shown on the right, a cylindrical or spherical object offers an infinitesimally small contact surface which does not afford stability.
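The criterion in the caption above can be sketched in a few lines of Python. This is a deliberately simplified illustration and not the code used to generate the dataset: it assumes a side-on, one-dimensional view in which every object is described only by the horizontal position of its centre, the width of its flat contact face (zero for a sphere or a cylinder lying on its side) and its mass. The names `Block` and `first_violation` are made up for this example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Block:
    x: float              # horizontal position of the object's centre
    contact_width: float  # width of its flat face; 0.0 for a sphere
    mass: float


def first_violation(stack: List[Block]) -> Optional[int]:
    """Check the centre-of-mass criterion for a stack ordered bottom to top.

    Sub-stacks are examined from top to bottom. Returns the index of the
    supporting object at which the criterion is first violated, or None
    if every sub-stack is supported.
    """
    for i in range(len(stack) - 2, -1, -1):
        above = stack[i + 1:]
        total_mass = sum(b.mass for b in above)
        com_x = sum(b.mass * b.x for b in above) / total_mass

        # Contact surface: overlap between the supporting object's face
        # and the face of the object resting directly on it.
        support, resting = stack[i], stack[i + 1]
        left = max(support.x - support.contact_width / 2,
                   resting.x - resting.contact_width / 2)
        right = min(support.x + support.contact_width / 2,
                    resting.x + resting.contact_width / 2)

        # A zero-width contact (left == right) can never contain the CoM.
        if not (left < com_x < right):
            return i
    return None


# Three unit-mass blocks, each shifted 0.8 to the right of the one below:
# every block sits on its neighbour, but the upper two together overhang
# the bottom block.
leaning = [Block(0.0, 2.0, 1.0), Block(0.8, 2.0, 1.0), Block(1.6, 2.0, 1.0)]
print(first_violation(leaning))
```

Note that the check on the top block alone passes here; the violation only shows up once the combined centre of mass of the upper two blocks is compared against the bottom block, which is why the sub-stacks have to be considered one after another.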

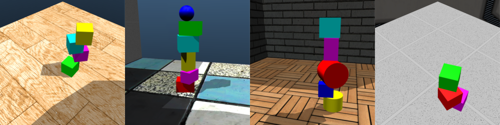

Inspired by that prior work, we wanted to create a virtual environment that produces physical experiences which can be fed as training data into neural networks. We used the MuJoCo [7] physics simulation engine to create different scenarios of object stacks, consisting of elementary geometric shapes like cuboids, cylinders and spheres with varying sizes and aspect ratios. When generating the stacks, we paid careful attention to the physics governing their stability, especially the centre of mass (CoM) criterion. We deliberately chose when and where the CoM criterion should be violated. By doing this, we ensured that the training dataset features a balanced variety of scenarios, e.g. as many stable scenarios as unstable ones, and unstable scenarios collapsing at different layers.

An overview of scenarios from our ShapeStacks dataset. We have created about 20,000 virtual object stacks with randomised colours, textures and lighting conditions and let a neural network observe them in order to acquire an intuition about the stability of stacks of rigid bodies.

We trained an Inception-v4 [8] based visual classifier on initial images of stacks to predict a binary stability label with a logistic regression. We achieved about 85% classification accuracy on our held-out test set. We also tested our classifier on a publicly available dataset of real-world block tower images [5] and achieved about 75% accuracy without any fine-tuning on real-world images.

In follow-up experiments we investigated whether the network based its predictions on a plausible physical intuition. We started off by visualising the importance our network assigns to certain regions of an input image when making a prediction. We find empirically that our network especially looks for non-planar support surfaces and overhanging objects when predicting that a stack is unstable. This is in line with human intuition and roughly corresponds to the visual implications of the centre of mass principle, although an exact CoM is never computed by the network.

In the heatmap on the right we visualise the image regions our network pays most attention to when predicting that a stack is unstable. We find that in about 80% of all cases, our network correctly attends to the region of the stack where the collapse starts.

Next, we investigated whether the network can compute a good stacking pose for an object based on the stability prediction. To this end, we constructed the following proxy task: we rotated the object under investigation underneath a larger box and predicted the stability of the observed scene. We used the anticipated stability as an indicator of an object’s ‘stackability’, i.e. how much support it can provide in a certain orientation.

We visualise the provided support of the lower object in different poses. A red colour indicates that the current pose is unsuitable for building a stable stack, the green colour indicates that the pose provides stable support for the object on top.
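As a rough illustration of this proxy task, the sketch below scores each candidate pose of an object by the predicted stability of the scene with the larger box on top and keeps the best one. Everything here is a stand-in: `predict_stability` is a hypothetical callable representing the trained network applied to a rendered scene, and the toy scores are invented purely to make the example runnable.

```python
from typing import Callable, Dict, Hashable, Iterable, Tuple


def best_pose(
    poses: Iterable[Hashable],
    predict_stability: Callable[[Hashable], float],
) -> Tuple[Hashable, float]:
    """Return the pose with the highest predicted stability and its score.

    `predict_stability` is assumed to return a value in [0, 1] for the
    scene "object in this pose, larger box resting on top of it".
    """
    scores: Dict[Hashable, float] = {p: predict_stability(p) for p in poses}
    pose = max(scores, key=scores.get)
    return pose, scores[pose]


# Invented scores standing in for network outputs (assumption, not data).
toy_scores = {
    ("cylinder", "upright"): 0.8,
    ("cylinder", "on_side"): 0.1,
}

pose, stackability = best_pose(toy_scores.keys(), toy_scores.get)
print(pose, stackability)
```

The score of the best pose can then serve as the object's stackability when comparing it against other objects.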

Lastly, we gauged how useful the stability predictions are in an artificial manipulation task. We created new scenarios with randomised objects and tried to assemble them into a stable stack using only the trained stability predictor. We started off by computing suitable stacking poses and ranked all objects according to their stackability scores with the support proxy experiment. More stackable objects should be placed first, less stackable ones last. Then we sampled positions for the next object to be stacked and evaluated the stability of the resulting tower with our prediction network.

The given objects are first oriented and ranked according to their stackability. Then they are stacked from most stackable to least stackable. Stacking positions are sampled in a simulated-annealing fashion: for each sampled position, the stability of the resulting tower is predicted, and the object is repeatedly moved to the most stable position in its vicinity until the process converges to the final position.
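The position search can be pictured with the following sketch. It is a simplification under stated assumptions rather than our actual procedure: it uses a greedy local search with a shrinking sampling radius instead of a full annealing schedule, treats a position as a 2D offset on top of the tower, and relies on a hypothetical `predict_stability` callable in place of the trained network. The function name and all parameters and their defaults are illustrative.

```python
import math
import random
from typing import Callable, Tuple

Position = Tuple[float, float]


def refine_position(
    start: Position,
    predict_stability: Callable[[Position], float],
    step: float = 0.2,
    n_samples: int = 16,
    shrink: float = 0.5,
    min_step: float = 1e-3,
    max_iters: int = 1000,
    seed: int = 0,
) -> Tuple[Position, float]:
    """Move towards the most stable nearby position until convergence."""
    rng = random.Random(seed)
    best, best_score = start, predict_stability(start)
    for _ in range(max_iters):
        if step < min_step:
            break
        # Sample candidate positions in the vicinity of the current one.
        candidates = [
            (best[0] + rng.uniform(-step, step),
             best[1] + rng.uniform(-step, step))
            for _ in range(n_samples)
        ]
        candidate = max(candidates, key=predict_stability)
        score = predict_stability(candidate)
        if score > best_score:
            best, best_score = candidate, score  # accept the improvement
        else:
            step *= shrink  # nothing better nearby: search more locally
    return best, best_score


def toy_predictor(pos: Position) -> float:
    """Invented stand-in: stability peaks when the object is centred."""
    return math.exp(-(pos[0] ** 2 + pos[1] ** 2))


start = (0.5, -0.4)
final, final_score = refine_position(start, toy_predictor)
print(final, final_score)
```

Because a candidate is only accepted when it scores higher than the current position, the predicted stability never decreases during the search; a true annealing schedule would occasionally accept worse positions to escape local optima.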

In the simulated stacking experiments, our model builds towers with a median height of eight pieces. This exceeds the maximum height observed during training (the training dataset only features stacks with up to six objects) and serves as a proof-of-concept that the learned intuition about structural stability can be employed successfully in a manipulation task.

Although this current application of physical intuition is still confined to a computer simulation, we continue to improve on the current approach and aim to deploy a more refined version of this system on a robot to solve puzzle and manipulation tasks in the real world. We have also published our current results, source code and dataset to allow fellow researchers and tinkerers to build upon our findings and train new models in the ShapeStacks environments. We hope that physical intuition enables robots one day to carry out more complex manipulation tasks – from handling dirty dishes to using tools – or at least enables them to compete with us in a game of Jenga.

References

[1] Peter W. Battaglia, Jessica B. Hamrick, and Joshua B. Tenenbaum: Simulation as an engine of physical scene understanding. PNAS November 5, 2013

[2] Jessica B. Hamrick, Peter W. Battaglia, Thomas L. Griffiths, Joshua B. Tenenbaum: Inferring mass in complex scenes by mental simulation. Cognition. December, 2016

[3] James R. Kubricht, Keith J. Holyoak, Hongjing Lu: Intuitive Physics: Current Research and Controversies. Trends in Cognitive Sciences. October 1, 2017

[4] Jiajun Wu, Ilker Yildirim, Joseph J. Lim, William T. Freeman, Joshua B. Tenenbaum: Galileo: Perceiving Physical Object Properties by Integrating a Physics Engine with Deep Learning. Advances in Neural Information Processing Systems 28 (NIPS 2015)

[5] Adam Lerer, Sam Gross, Rob Fergus: Learning Physical Intuition of Block Towers by Example. ICML’16 Proceedings of the 33rd International Conference on Machine Learning

[6] Jiajun Wu, Erika Lu, Pushmeet Kohli, Bill Freeman, Josh Tenenbaum: Learning to See Physics via Visual De-animation. Advances in Neural Information Processing Systems 30 (NIPS 2017)

[7] Emanuel Todorov, Tom Erez and Yuval Tassa: MuJoCo: A physics engine for model-based control. 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems

[8] Christian Szegedy, Sergey Ioffe, Vincent Vanhoucke, Alex Alemi: Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. AAAI 2017