Soft Robotic Hand with Tactile Sensing - Grasping objects for object identification

Grasping objects for object identification

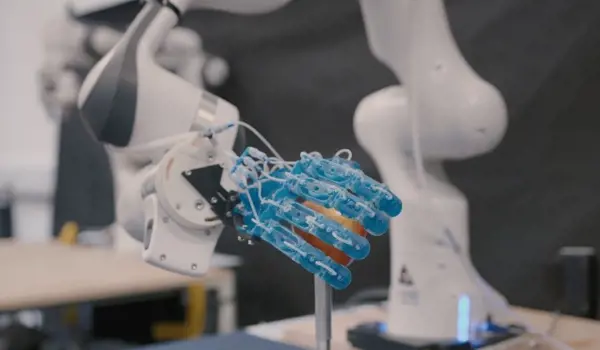

In this video, the hand grasps a cup from a wide range of poses while we record tactile data from the distributed pneumatic sensors. After each grasp, the belief distribution output by the trained network is shown. The object is initially misclassified, but the correct classification is reached as further grasps are taken.

As the field of soft robotics continues to evolve, there is growing interest in robots that can interact with their environment in a more natural and intuitive way. One area of focus is soft robotic hands that mimic the dexterity and sensitivity of the human hand. We have designed a multi-material 3D printed soft robotic hand with integrated tactile sensing. The hand can grasp a wide range of objects and identify them using its distributed pneumatic tactile sensors.

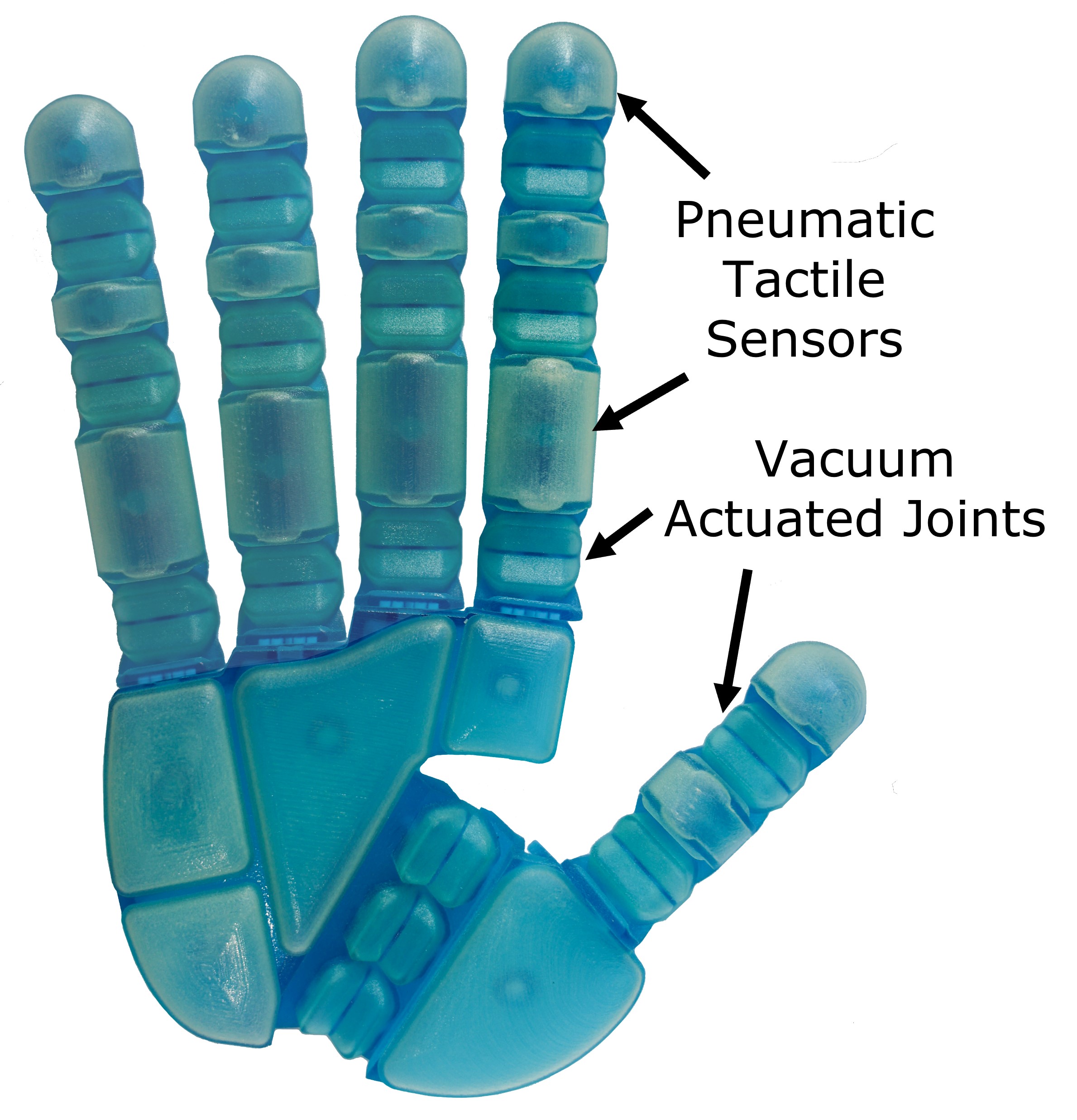

Multi-material 3D Printing

Multi-material 3D printing lets us assign material behaviours to each section of the hand. For example, we require compliance in the sensors and joints, but rigidity in the 'bone' pieces to provide structure. Printing the hand monolithically, as a single piece, also reduces the complexity of fabrication and improves repeatability between samples.

Exploiting Compliance

By designing the hand with compliant joints, we can apply a single internal pressure to each joint and still hold a diverse set of objects. This works because the hand naturally conforms to the shape of the object without requiring positional feedback. In effect, we have moved the complexity of the grasping task out of the control scheme and into the materials themselves. This concept is known as 'Computational Morphology'. Additionally, designing the sensors to be soft ensures 'safe' contact with objects.

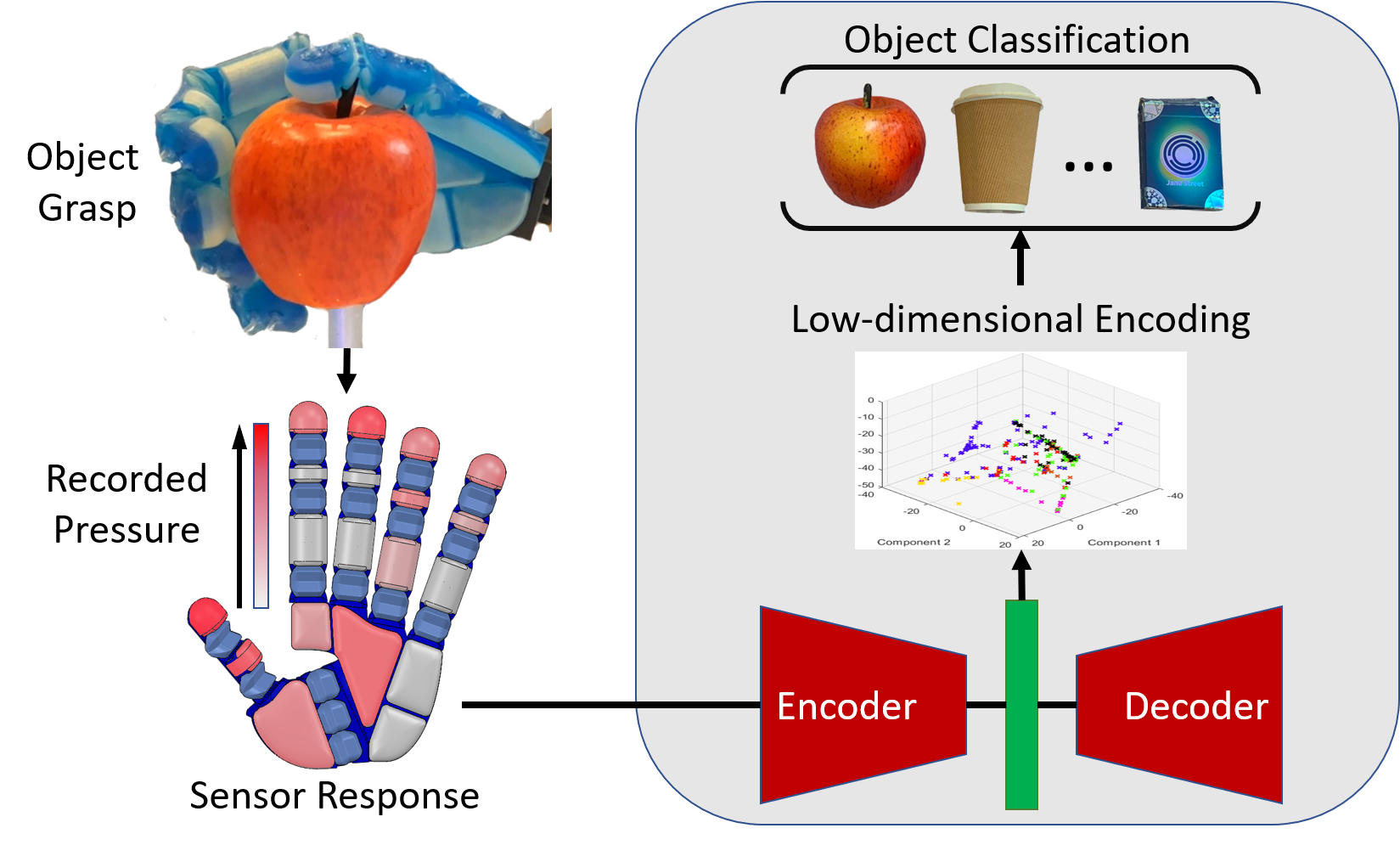

Tactile Object Identification

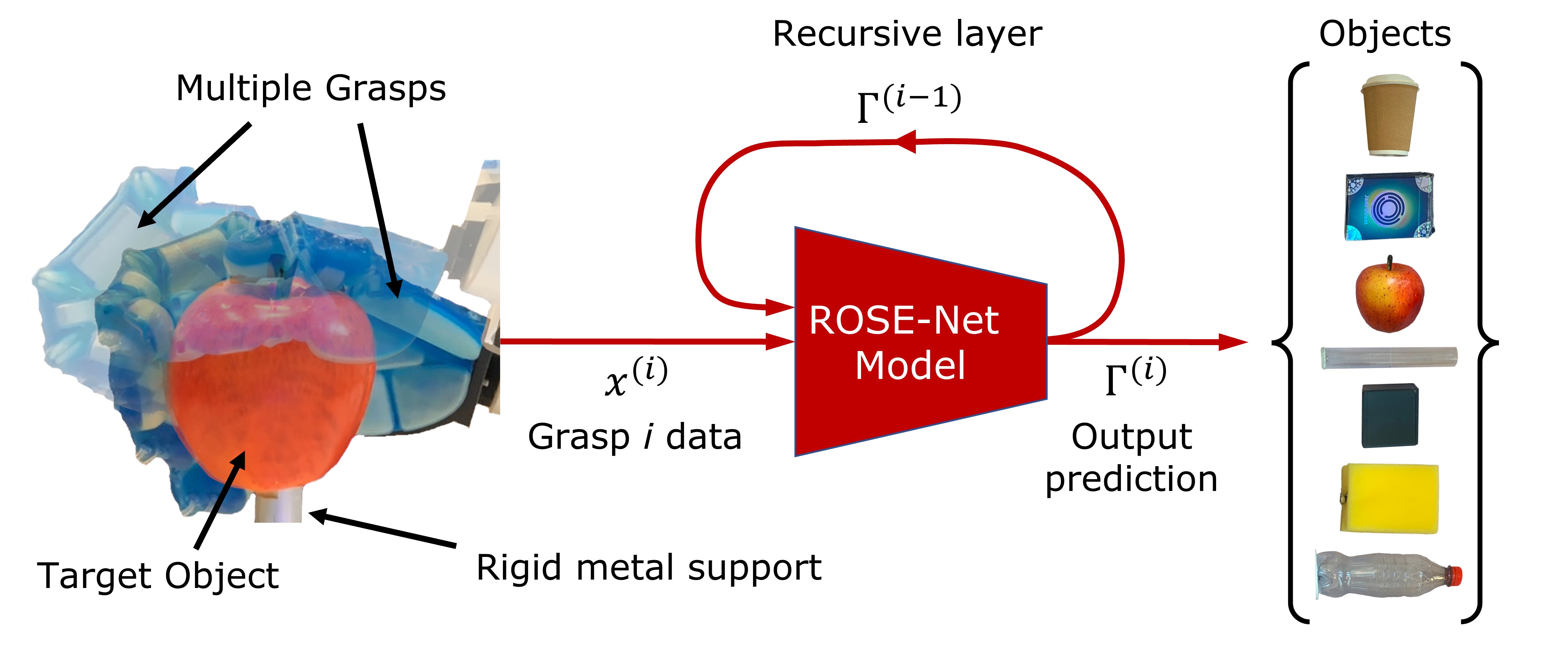

Using the tactile data acquired from grasping the objects, we have successfully performed object identification with neural networks. We also wanted a system inspired by how humans touch and grasp the same object several times to build up a physical representation of it. We therefore devised a multi-grasp scheme that uses the results of multiple grasps of the same object to inform the identification. We have found that this gives higher accuracy than relying on a single grasp.
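To illustrate the general idea of combining evidence across grasps, here is a minimal sketch. It is not the method used for the hand: it simply multiplies per-grasp class probabilities (a naive Bayes style update) under the assumptions that the grasps are conditionally independent given the object and that all classes are equally likely beforehand. The function name `fuse_grasp_beliefs`, the class list, and the probability values are all hypothetical and chosen only for illustration.

```python
import numpy as np

CLASSES = ["cup", "ball", "bottle"]  # hypothetical object classes


def fuse_grasp_beliefs(per_grasp_probs, prior=None, eps=1e-12):
    """Return the belief over classes after each successive grasp.

    per_grasp_probs: (n_grasps, n_classes) array; each row is the class
    probability vector a classifier produced for one grasp.
    Assumes grasps are conditionally independent given the object.
    """
    probs = np.asarray(per_grasp_probs, dtype=float)
    n_classes = probs.shape[1]
    if prior is None:
        prior = np.full(n_classes, 1.0 / n_classes)  # uniform prior (assumption)
    log_belief = np.log(np.asarray(prior, dtype=float) + eps)

    history = []
    for p in probs:
        # Multiply in the new evidence (addition in log space for stability).
        log_belief = log_belief + np.log(p + eps)
        # Normalise so the belief sums to one.
        belief = np.exp(log_belief - log_belief.max())
        belief /= belief.sum()
        history.append(belief)
    return np.array(history)


# Made-up network outputs for three grasps of a cup; the first is misleading.
grasps = [
    [0.30, 0.50, 0.20],
    [0.60, 0.20, 0.20],
    [0.70, 0.10, 0.20],
]
history = fuse_grasp_beliefs(grasps)
for i, belief in enumerate(history, start=1):
    print(f"after grasp {i}: {CLASSES[int(belief.argmax())]}", np.round(belief, 3))
```

With these made-up numbers, the first grasp alone favours the wrong class, and the fused belief switches to the cup from the second grasp onward, mirroring the behaviour described for the video.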

The Monolithic Multi-Material 3D Printed Soft Hand

The EDAMS Model for Multi-Grasp Object Identification

The ROSE-Net Model - Simulating a Bayesian Framework