Generation of tactile data using deep learning

Tactile perception is key to robotics applications such as manipulation and exploration. However, collecting tactile data is time-consuming, especially compared to acquiring visual data. Additionally, because tactile sensors rely on different sensing technologies, the collected tactile datasets are usually sensor-specific. These constraints limit the use of tactile data in machine learning solutions for robotics applications.

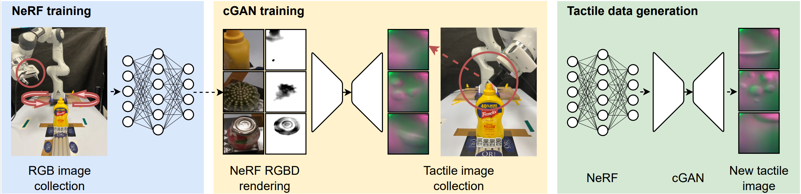

In this paper, we propose a generative model that simulates realistic tactile sensory data for use in downstream robotics tasks. The model takes RGB-D images as input and generates the corresponding tactile images as output. It further leverages neural radiance fields (NeRF), a neural network that learns (‘remembers’) a 3D representation of a scene, to render RGB-D images of the target object from arbitrary viewing angles for tactile image synthesis. The experimental results show the potential of the proposed approach to generate realistic tactile sensory data and to augment manually collected tactile datasets. In addition, we show that our model can be transferred from one tactile sensor to another using only a small fine-tuning dataset.
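A NeRF renders an image by compositing density and color samples along each camera ray. The same compositing weights also give an expected depth, which is one way to obtain the RGB-D views the tactile generator consumes. The sketch below shows that compositing step for a single ray in plain Python, assuming the standard NeRF volume-rendering quadrature. The function name `render_ray` and its inputs (per-sample densities, colors, and sample distances, which a trained NeRF would predict) are illustrative assumptions, not the authors' implementation.

```python
import math
from typing import Sequence, Tuple


def render_ray(
    sigmas: Sequence[float],
    colors: Sequence[Tuple[float, float, float]],
    t_vals: Sequence[float],
) -> Tuple[Tuple[float, float, float], float]:
    """Composite samples along one camera ray into an RGB color and an expected depth.

    Hypothetical helper: sigmas are volume densities, colors are RGB values, and
    t_vals are the (increasing) sample distances along the ray, all assumed to come
    from a trained NeRF queried at those sample points.
    """
    n = len(t_vals)
    # Distance between adjacent samples; the last interval is treated as effectively infinite.
    deltas = [t_vals[i + 1] - t_vals[i] for i in range(n - 1)] + [1e10]

    rgb = [0.0, 0.0, 0.0]
    depth = 0.0
    transmittance = 1.0  # T_i: fraction of light that reaches sample i unoccluded

    for sigma, color, t, delta in zip(sigmas, colors, t_vals, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # contribution of this sample
        for c in range(3):
            rgb[c] += weight * color[c]
        depth += weight * t                     # expected termination depth
        transmittance *= 1.0 - alpha            # light left for later samples

    return (rgb[0], rgb[1], rgb[2]), depth
```

Applying `render_ray` to the ray through every pixel yields a color image and a depth map, that is, an RGB-D view from the chosen camera pose. Under this assumption, such views could be rendered from arbitrary angles and passed to the tactile image generator. Note that the depth here is unnormalized, so rays that hit nothing return a depth near zero rather than "far".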

You can find our paper here.