Goal-Oriented Autonomous Long-Lived Systems Research at the Oxford Robotics Institute

Mission Planning for Autonomous Systems

The Goal-Oriented Long-Lived Systems (GOALS) Lab researches behaviour generation for autonomous systems. In particular, we focus on long-term autonomy and on task and mission planning for mobile robots that must operate for extended periods (days, weeks or months) in dynamic, uncertain environments. To achieve long-term autonomous behaviour, we apply artificial intelligence and formal methods to robotics. We focus on decision making under uncertainty and machine learning, so that the longer a robot acts in an environment, the better it performs. We specialise in optimising behaviour against rich specifications, such as temporal logics, risk-aware objectives and multi-objective scenarios, and in providing formal guarantees on the resulting performance.
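As a concrete illustration of what a formal performance guarantee can mean, the sketch below computes, for a small Markov decision process (MDP), the maximum probability of eventually reaching a goal state, together with a policy that achieves it. This is the quantity a probabilistic model checker such as PRISM reports for a reachability property like `Pmax=? [ F "goal" ]`, one of the simplest temporal-logic specifications. It is a textbook value-iteration sketch, not the lab's own method; the function name, state names, actions and probabilities are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical MDP encoding: state -> action -> list of (probability, next_state).
# States with no actions are absorbing.
MDP = Dict[str, Dict[str, List[Tuple[float, str]]]]


def max_reach_probability(
    mdp: MDP, goal: str, eps: float = 1e-10, max_iters: int = 10_000
) -> Tuple[Dict[str, float], Dict[str, str]]:
    """Value iteration for the maximum probability of eventually reaching `goal`."""
    # Start from 1 at the goal and 0 elsewhere; values then increase towards the optimum.
    values = {s: (1.0 if s == goal else 0.0) for s in mdp}
    for _ in range(max_iters):
        delta = 0.0
        new_values = {}
        for s, actions in mdp.items():
            if s == goal or not actions:
                new_values[s] = values[s]  # goal and absorbing states keep their value
                continue
            # Bellman update: best action by expected value of the successor states.
            best = max(
                sum(p * values[t] for p, t in outcomes) for outcomes in actions.values()
            )
            delta = max(delta, abs(best - values[s]))
            new_values[s] = best
        values = new_values
        # Heuristic stopping rule: a small update does not by itself certify eps-accuracy.
        if delta < eps:
            break
    # Extract a policy that is greedy with respect to the converged values.
    policy = {
        s: max(actions, key=lambda a: sum(p * values[t] for p, t in actions[a]))
        for s, actions in mdp.items()
        if s != goal and actions
    }
    return values, policy


# Hypothetical example: a direct "risky" action versus a detour via s1.
example: MDP = {
    "s0": {"safe": [(1.0, "s1")], "risky": [(0.7, "goal"), (0.3, "fail")]},
    "s1": {"go": [(0.8, "goal"), (0.1, "fail"), (0.1, "s0")]},
    "goal": {},
    "fail": {},
}

if __name__ == "__main__":
    vals, pol = max_reach_probability(example, goal="goal")
    print(round(vals["s0"], 4), pol["s0"])  # 0.8889 safe
```

In this hypothetical model the "risky" action reaches the goal directly with probability 0.7, whereas the detour via `s1` succeeds with probability 8/9 ≈ 0.889, so the computed policy chooses "safe". Stopping when updates fall below `eps` is a heuristic; sound variants such as interval iteration provide guaranteed error bounds.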

Planning for Multi-Agent Tasks

As robots become more commonplace in the real world, multiple agents increasingly need to be deployed together in the same space, and they must coordinate effectively to complete their tasks. In the multi-agent context, we consider planning both for multi-robot systems and for human-robot teams, including problems of shared autonomy. We develop planning solutions for both cooperative and non-cooperative teams of agents. For cooperative teams, we explore how robots interact with each other over time, and how modelling these interactions can improve planning. For non-cooperative agents, we use game theory to coordinate multiple robots owned by different companies in a way that is considered fair to all parties. We also investigate problems in which teams of humans must collaborate with robots, and have developed methods that switch control between the robot's autonomy and a human operator to optimise task performance and safety.

Planning with Learned Models

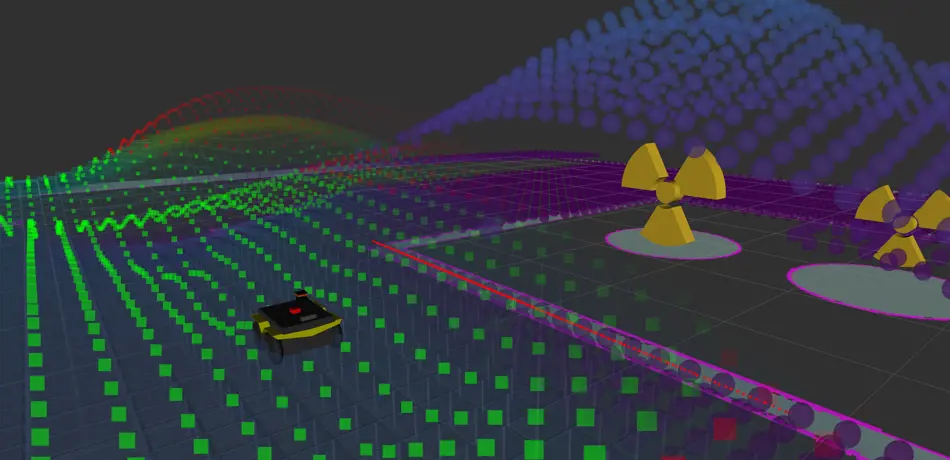

Autonomous agents that operate for extended periods of time require models of the world they operate in, but in dynamic environments it is not always possible to specify representative models in advance. In this strand of work, we enable agents to update their models using observations gathered online, and we explore planning techniques that are robust to inaccuracies in the learned models. As part of the RAIN Hub, we are interested in learned models that enable safe exploration of hazardous environments, such as nuclear facilities. As part of the ORCA Hub, our learned models maintain uncertainty over predictions of ocean currents. By allowing agents to account for model uncertainty in their decision making and to update their models online, we improve their ability to represent dynamic environments and, in turn, their behaviour over time.
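The two ideas in this paragraph, updating a model online and letting its uncertainty shape decisions, can be illustrated with a minimal sketch. It assumes, purely for illustration, that each candidate option (here, two hypothetical routes) has a scalar cost such as travel time that is observed repeatedly. Each estimate is updated incrementally with Welford's algorithm, and options are ranked by a risk-aware cost of mean plus `kappa` standard deviations. The class and function names, route names, data, `kappa` and the mean-plus-deviation rule are all assumptions, not the models used in the RAIN or ORCA Hub work.

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass
class OnlineEstimate:
    """Running mean and variance of a scalar observation (Welford's algorithm)."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        # Incorporate one new observation without storing past data.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def std(self) -> float:
        """Sample standard deviation (requires at least two observations)."""
        return math.sqrt(self.m2 / (self.n - 1))


def risk_aware_cost(est: OnlineEstimate, kappa: float) -> float:
    """Hypothetical risk rule: mean cost plus kappa standard deviations.
    Options observed fewer than twice are treated as too uncertain (infinite cost)."""
    if est.n < 2:
        return math.inf
    return est.mean + kappa * est.std()


def choose_option(estimates: Dict[str, OnlineEstimate], kappa: float = 1.0) -> str:
    """Pick the option with the lowest risk-aware cost."""
    return min(estimates, key=lambda name: risk_aware_cost(estimates[name], kappa))


if __name__ == "__main__":
    # Hypothetical travel times (minutes) observed online on two routes.
    observations = {
        "coastal": [10.0, 12.0, 11.0, 13.0],
        "offshore": [8.0, 14.0, 6.0, 12.0],
    }
    estimates = {name: OnlineEstimate() for name in observations}
    for name, xs in observations.items():
        for x in xs:
            estimates[name].update(x)
    print(choose_option(estimates, kappa=0.0))  # offshore (risk-neutral)
    print(choose_option(estimates, kappa=1.0))  # coastal (risk-aware)
```

With `kappa = 0` the choice is risk-neutral and the lower-mean but more variable offshore route wins; with `kappa = 1` the spread of past observations is penalised and the more predictable coastal route is preferred.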