Research at the Cognitive Robotics group at the Oxford Robotics Institute

Research @ CRG

Explainable Autonomy

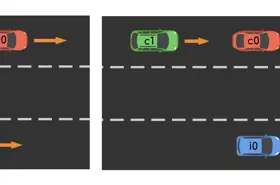

Understanding the decisions taken by an autonomous machine is key to building public trust in robotics and autonomous systems. The Sense-Assess-eXplain (SAX) project is part of the Assuring Autonomy International Programme and will design, develop, and demonstrate fundamental AI technologies in real-world applications to address this issue of explainability. The aim of the project is to build robots, or autonomous vehicles, that can sense and understand their environment, assess their own capabilities, and provide causal explanations for their own decisions. In on-road and off-road driving scenarios, we are studying what key stakeholders (users, system developers, regulators) require of explanations. These requirements will inform the development of the algorithms that generate the causal explanations.

Assuring Autonomy for Opportunistic Science

Enabling rovers to autonomously detect and explore targets can increase the overall scientific outcome of extraterrestrial missions.

Within the context of planetary exploration and as part of the European Horizon 2020 project ADE (Autonomous Decision Making in Very Long Traverses), we investigate AI and ML methods for identifying and assessing scientific targets in high-resolution and thermal images, with the aim of increasing the scientific output. In particular, our proposed approach uses variational autoencoders to detect scientific targets in images and assess their novelty during planetary rover missions. Linked to the above work and as part of my Programme Fellowship within the Assuring Autonomy International Programme, we are building an assurance case for the detection of scientific targets in planetary missions. The assurance case will investigate methods that justify the choices made during the network design phase as well as the data used for training and testing. Moreover, these methods will provide confidence arguments for the performance evaluation of machine learning algorithms.
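To make the idea of novelty assessment with a variational autoencoder more concrete, the sketch below shows one common way such a score can be computed: the negative evidence lower bound (reconstruction error plus a KL term), where a higher value suggests an input the model finds less familiar. This is an illustration only and not the ADE project's implementation. The names `novelty_score`, `toy_encode`, and `toy_decode` are hypothetical, the encoder and decoder are hand-set linear maps rather than trained networks, and the inputs are two-element vectors standing in for images.

```python
import math
import random


def novelty_score(x, encode, decode, n_samples=32, seed=0):
    """Monte Carlo estimate of the negative ELBO for one input (higher = more novel).

    Assumes a diagonal Gaussian posterior, a standard normal prior, and a
    unit-variance Gaussian likelihood (constant terms are dropped).
    """
    rng = random.Random(seed)
    mu, log_var = encode(x)
    # Closed-form KL divergence between N(mu, diag(exp(log_var))) and N(0, I).
    kl = 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, log_var))
    sq_err = 0.0
    for _ in range(n_samples):
        # Reparameterised sample from the approximate posterior.
        z = [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]
        x_hat = decode(z)
        sq_err += sum((a - b) ** 2 for a, b in zip(x, x_hat))
    return 0.5 * sq_err / n_samples + kl


# Hypothetical stand-in for a trained model: "familiar" data lies along D.
D = (1.0 / math.sqrt(2.0), 1.0 / math.sqrt(2.0))


def toy_encode(x):
    # Project onto D; a fixed small posterior variance is assumed.
    return [x[0] * D[0] + x[1] * D[1]], [-4.0]


def toy_decode(z):
    # Map the one-dimensional latent back onto the line spanned by D.
    return [z[0] * D[0], z[0] * D[1]]


familiar = [1.0, 1.0]  # lies on the direction the toy model can reconstruct
novel = [2.0, 0.0]     # same latent code, but poorly reconstructed

print(novelty_score(familiar, toy_encode, toy_decode))
print(novelty_score(novel, toy_encode, toy_decode))
```

In this toy setting both inputs map to the same latent code, so the difference in score comes entirely from how well each one is reconstructed. With a trained model, a threshold on the score would typically be calibrated on held-out data.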

Responsible Robotics

When deploying autonomous robots in the real world, it is critical that users, developers, and regulators understand what a system is doing, what it has done, what it intends to do, and why. As collaborators in the RoboTIPS project, we are investigating the design of an “ethical black box” that allows robots to explain their own behaviour during incident investigations in domains including assistive robotics and autonomous driving.